Forecast: Replacing Spot-Checks With The Semantic Audit Process (Ref: Stripe Radar)

Sales Ops managers constantly walk a tightrope between velocity and integrity. If we lock down the CRM with too many mandatory fields and validation rules, sales reps revolt, and data quality paradoxically drops because they start mashing keyboards just to bypass the gates. If we remove the guardrails to increase speed, the data swamp expands, and our forecasts lose reliability.

Historically, the compromise has been random sampling or periodic manual cleanups. We accept that a percentage of data will be bad, and we rely on quarterly "spring cleaning" or spot-checks to fix the glaring issues. We do this because manual auditing is expensive—we simply don’t have the human hours to review every single call log, opportunity update, or deal note.

Here is my forecast for the next 6-12 months: The plummeting cost of LLM inference (specifically with models like GPT-4o-mini or Claude Haiku) will make "Sampling" obsolete. Instead of checking 5% of records, we will move to 100% Semantic Coverage.

Just as Stripe Radar evaluates every single transaction for fraud risk in milliseconds, Sales Ops teams will deploy background automation that scores every single CRM interaction for "Data Quality Risk" in real time. We are shifting from a paradigm of Prevention (rigid UI blockers) to Detection (asynchronous auditing).

The Constraint Shift: Why Now?

Until recently, running a large language model on every Salesforce field update or HubSpot note was cost-prohibitive and slow. You couldn't justify spending $0.05 per record just to check whether a rep filled out the "Next Steps" field correctly.

However, with per-token prices for small models falling steeply, that economic constraint has largely disappeared. It is now financially viable to have an AI "Shadow Auditor" review thousands of updates a day for a few dollars a month. This changes the strategy from "How do I force them to enter data?" to "How do I measure the quality of what they entered?"
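To make the "few dollars a month" claim concrete, here is a back-of-envelope calculator. The workload (2,000 updates a day, roughly 500 prompt tokens and 50 reply tokens per update) and the per-token prices are assumptions chosen for illustration, not quotes from any provider; plug in your own volumes and your provider's current price sheet.

```python
# Back-of-envelope monthly cost of auditing every CRM update with a small LLM.
# ASSUMPTION: all volumes and prices used below are illustrative placeholders;
# check your provider's current pricing before relying on the result.

def monthly_audit_cost(
    updates_per_day: int,
    input_tokens_per_update: int,
    output_tokens_per_update: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
    days: int = 30,
) -> float:
    """Return the estimated USD cost of scoring every update for `days` days."""
    calls = updates_per_day * days
    input_cost = calls * input_tokens_per_update / 1_000_000 * usd_per_million_input
    output_cost = calls * output_tokens_per_update / 1_000_000 * usd_per_million_output
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 2,000 updates/day, ~500 prompt tokens, ~50 reply tokens.
    # Hypothetical prices: $0.15 per 1M input tokens, $0.60 per 1M output tokens.
    cost = monthly_audit_cost(2_000, 500, 50, 0.15, 0.60)
    print(f"${cost:.2f} per month")
```

With those assumed inputs the script prints $6.30 per month. The exact figure matters less than the shape of the formula: cost scales linearly with volume and price, so even a tenfold busier CRM stays in the tens of dollars under these assumptions.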

The Solution: The Semantic Audit Process

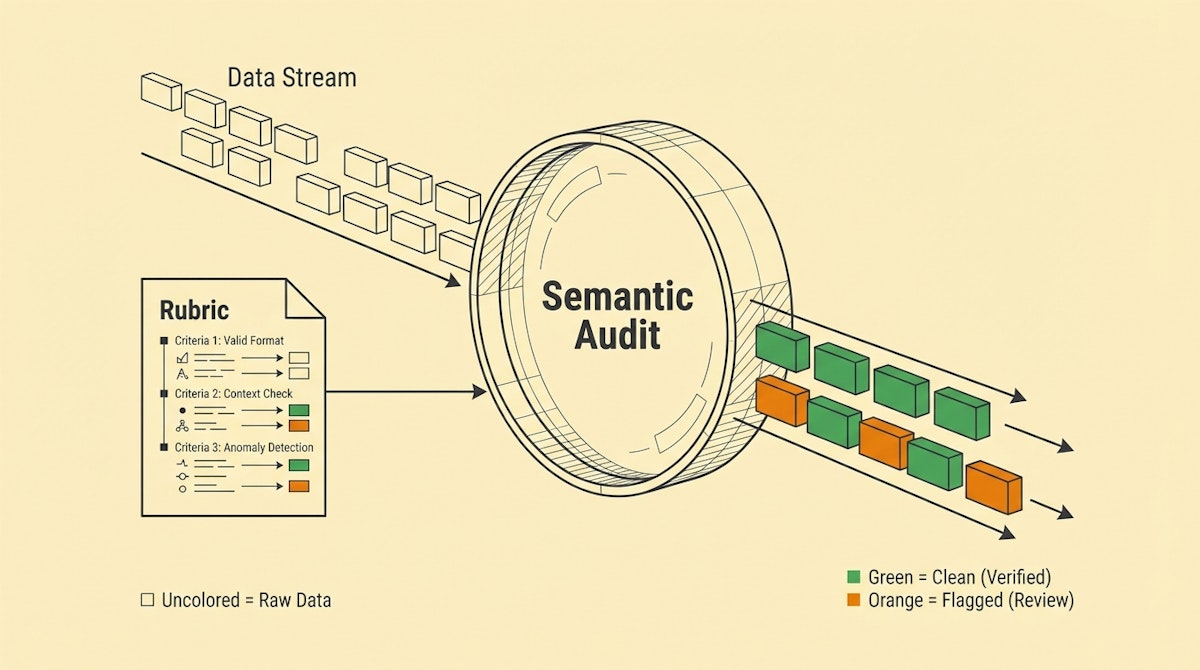

This approach mimics the Stripe Radar architecture: allow the traffic (data) to flow through to minimize friction, but immediately score it and flag anomalies for review.

I have observed that effective implementations of this usually follow a three-step asynchronous flow:

1. The Ingestion (Allow Frictionless Entry)

Let the sales rep save the Opportunity with a vague "Next Step." Do not block the UI. Velocity is key for their buy-in. We treat the CRM save event as a trigger, not a final state.
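In Make or n8n the CRM trigger module plays this role, but the underlying pattern is easy to see in plain code: accept the save, push the event onto a queue, and return immediately so the audit never sits in the rep's path. The `SaveEvent` shape, the `on_record_saved` handler, and the in-process queue below are hypothetical stand-ins, not any CRM's actual webhook format.

```python
import queue
from dataclasses import dataclass, field
from datetime import datetime, timezone


# Hypothetical shape of a CRM "record saved" event; real Salesforce or HubSpot
# payloads differ, so map your own fields into this structure.
@dataclass
class SaveEvent:
    record_id: str
    field_name: str
    new_value: str
    received_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# In-process FIFO standing in for whatever buffer your automation tool provides.
audit_queue = queue.Queue()


def on_record_saved(record_id: str, field_name: str, new_value: str) -> str:
    """Accept the save unconditionally and defer the audit to a background worker."""
    audit_queue.put(SaveEvent(record_id, field_name, new_value))
    return "accepted"  # The rep's save is never blocked or rejected here.
```

A background worker would then call `audit_queue.get()` and hand each event to the evaluation step; the rep only ever sees the immediate "accepted".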

2. The Semantic Evaluation (The "Radar")

An automation scenario (using Make or n8n) watches for these updates. It sends the new data payload to a low-cost LLM with a specific rubric.

- Input: The text entered by the rep (e.g., "Client is interested, will call back").

- Rubric: "Does this update contain a specific date? Does it mention a stakeholder? Is it actionable?"

- Output: A binary pass/fail or a quality score (0-100).
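One way to wire the rubric into a prompt and turn the model's answer into the 0-100 score is sketched below. The three questions are the ones listed above; asking for a JSON reply and weighting each criterion equally are assumptions of this sketch, and the actual LLM call is left out because it depends on which provider module your Make or n8n scenario uses.

```python
import json

# Rubric questions from the text; the JSON keys are hypothetical names.
RUBRIC = {
    "has_specific_date": "Does this update contain a specific date?",
    "mentions_stakeholder": "Does it mention a stakeholder?",
    "is_actionable": "Is it actionable?",
}


def build_prompt(update_text: str) -> str:
    """Build an audit prompt asking the model to answer each rubric question as JSON."""
    questions = "\n".join(f'- "{key}": {question}' for key, question in RUBRIC.items())
    return (
        "You are auditing a CRM update for data quality.\n"
        f"Update: {update_text!r}\n"
        "Answer each question with true or false. Reply with JSON only, "
        "using these keys:\n" + questions
    )


def score_from_reply(reply: str) -> int:
    """Convert the model's JSON answers into a 0-100 score (equal weight per criterion).

    Missing keys or non-boolean values count as a fail.
    """
    answers = json.loads(reply)
    passed = sum(1 for key in RUBRIC if answers.get(key) is True)
    return round(100 * passed / len(RUBRIC))
```

For example, a reply that marks only `is_actionable` as true scores 33, and one that passes two of the three criteria scores 67. If you prefer the binary pass/fail output instead, compare `passed` against `len(RUBRIC)` rather than computing a percentage.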

3. The Governance Router

Based on the score, the automation decides the governance action:

- High Score (>80): Do nothing. The data is clean.

- Low Score (<40): Trigger a Slack alert to the rep: "Hey, I noticed the update on the Acme Deal is a bit vague. Can you add the specific stakeholder name?"
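The two thresholds translate directly into a small routing function. The text leaves scores from 40 to 80 (inclusive) unspecified; sending them to a "log only" bucket that feeds the weekly aggregate is an assumption of this sketch. The Slack side uses an incoming webhook, which accepts a JSON body with a `text` field; the webhook URL is one you create in your own workspace and is not shown here.

```python
import json
import urllib.request
from typing import Optional, Tuple

HIGH_THRESHOLD = 80  # Scores above this: data is clean, do nothing.
LOW_THRESHOLD = 40   # Scores below this: nudge the rep in Slack.


def route(score: int, deal_name: str) -> Tuple[str, Optional[dict]]:
    """Map a quality score to a governance action and an optional Slack payload."""
    if score > HIGH_THRESHOLD:
        return "no_action", None
    if score < LOW_THRESHOLD:
        text = (
            f"Hey, I noticed the update on the {deal_name} is a bit vague. "
            "Can you add the specific stakeholder name?"
        )
        return "slack_nudge", {"text": text}
    # ASSUMPTION: the 40-80 band is not defined in the text; this sketch only logs it.
    return "log_only", None


def send_to_slack(webhook_url: str, payload: dict) -> None:
    """POST a JSON payload to a Slack incoming-webhook URL that you supply."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        response.read()
```

In a real scenario the nudge would ideally name whichever rubric criterion failed rather than always asking for the stakeholder; the fixed message here simply mirrors the example above.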

Comparison: Static Validation vs. Semantic Audit

The shift is from deterministic rules (RegEx, required fields) to probabilistic scoring.

| Feature | Static Validation (Old) | Semantic Audit (New) |
|---|---|---|
| Trigger | On Save (Synchronous) | Post-Save (Asynchronous) |
| User Experience | High Friction (Blockers) | Zero Friction (Invisible) |
| Logic | Binary (Filled vs. Empty) | Nuanced (Contextual Quality) |
| Coverage | 100% of Required Fields | 100% of All Context |

Implementing The "Quality Score" Field

To make this actionable, I recommend adding a custom field to your main objects (Lead/Opportunity) called Data_Quality_Score.

You don't need to harass reps for every single error. Instead, you can aggregate this score. If a rep's average Data_Quality_Score drops below a threshold over a week, that’s when a manager steps in for coaching. This moves the conversation from "You didn't fill out this field" to "Your pipeline visibility is trending low."
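The weekly roll-up can be as simple as averaging each rep's scores over a seven-day window and flagging anyone below the line. The input shape (rep, day, score tuples) and the default threshold of 60 are hypothetical; in practice you would pull Data_Quality_Score values from a CRM report or export.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def reps_needing_coaching(
    scores: Iterable[Tuple[str, date, float]],
    week_start: date,
    threshold: float = 60.0,  # ASSUMPTION: placeholder coaching threshold.
) -> Dict[str, float]:
    """Return {rep: weekly average} for reps whose average score is below threshold.

    Only scores dated within [week_start, week_start + 7 days) are counted.
    """
    week_end = week_start + timedelta(days=7)
    by_rep: Dict[str, List[float]] = defaultdict(list)
    for rep, day, score in scores:
        if week_start <= day < week_end:
            by_rep[rep].append(score)
    averages = {rep: mean(values) for rep, values in by_rep.items()}
    return {rep: avg for rep, avg in averages.items() if avg < threshold}
```

Averaging over a window smooths out one-off vague notes, so a manager only hears about a rep whose pattern has slipped, which matches the coaching conversation described above.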

Conclusion

The goal of governance isn't to create administrative hurdles; it's to ensure the business can trust its data. By leveraging low-cost LLMs to audit 100% of our data stream, we can finally dismantle the "Required Field" bureaucracy. We gain better data fidelity not by forcing compliance, but by measuring it silently and nudging behavior only when necessary.
