Review: Nanonets As The Data Integrity Gatekeeper For Financial Automation

The "Garbage In, Garbage Out" Anxiety

For any Financial Controller, the phrase "automate your accounting" usually triggers a specific anxiety: the fear of corrupting the General Ledger.

We all want to eliminate manual data entry. But we also know that manual entry, for all its flaws, includes a layer of human judgment. A human can spot that an invoice date is obviously wrong or that a vendor name is misspelled. When we talk about automating financial flows—specifically the intake of unstructured documents like PDF invoices or receipts—the biggest hurdle isn't connectivity; it's trust.

In the context of scaling operations (something I grapple with daily at Alegria.group), I see many teams try to solve this with simple "text scrapers" or regex-based tools. They usually fail because the data quality is too low to trust in an automated workflow.

This is where Nanonets enters the conversation. It positions itself not just as an OCR (Optical Character Recognition) tool, but as an intelligent data extraction layer that learns from your corrections.

In this review, I’ll assess Nanonets specifically through the lens of a Financial Controller who prioritizes data integrity over raw speed.

What Is Nanonets in the Automation Stack?

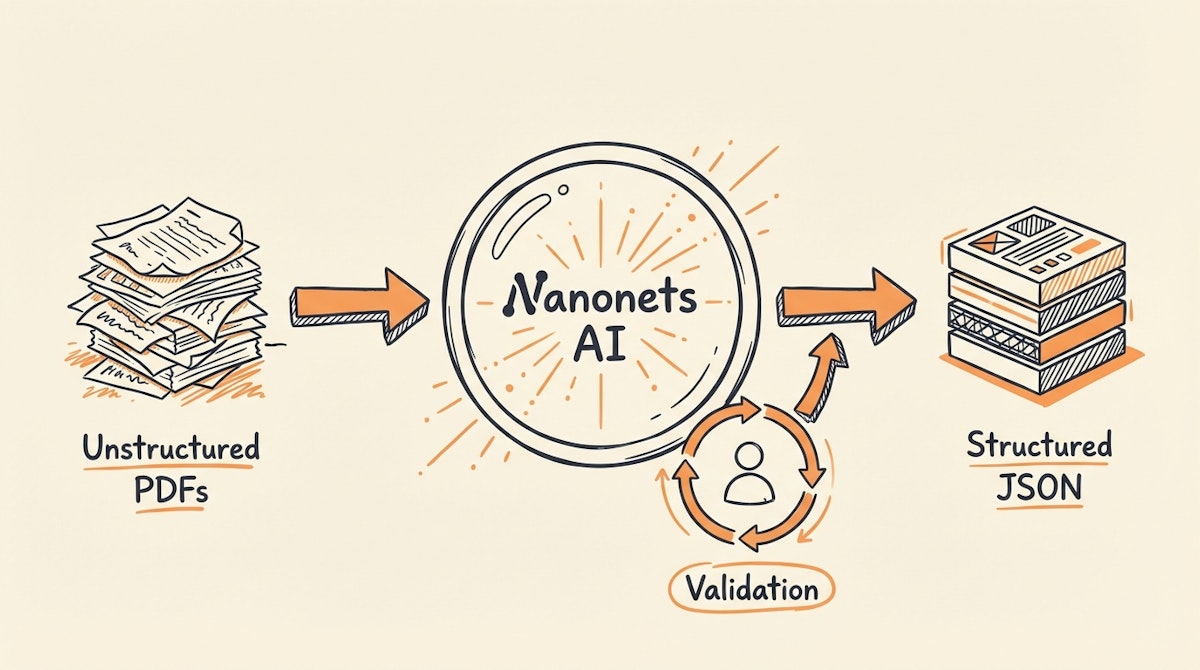

At its core, Nanonets is a machine learning platform designed to extract structured data from unstructured documents. Unlike legacy OCR tools (like ABBYY) that often rely on strict templates or zonal mapping (telling the software exactly where to look for the "Total" field), Nanonets uses deep learning to understand the context of the document.

For a financial automation stack, Nanonets acts as the Refinement Plant.

- Input: Messy, inconsistent PDF invoices via email or upload.

- Process: AI extraction + Human Verification (optional but critical).

- Output: Clean, standardized JSON data sent to Make, Zapier, or your ERP.

The Core Value: The "Human-in-the-Loop" UI

The feature that makes Nanonets particularly relevant for finance is its validation interface.

Most OCR tools behave like a "black box": files go in, data comes out, and if it's wrong, good luck fixing it. Nanonets acknowledges that AI isn't perfect. When the model's Confidence Score for a document falls below a threshold you set, it flags that document for manual review.

This is the "Draft-and-Verify" approach I often advocate for. The interface allows a junior accountant to quickly tab through the fields on a screen that shows the PDF side-by-side with the extracted data.

Crucially: Every time a human corrects a field, the model learns. This feedback loop is the "Fuel" for long-term automation.

Critical Analysis for the Financial Controller

1. Data Standardization (The Fuel)

The primary pain point for controllers is non-standard data. Vendor A sends "Inv #123", Vendor B sends "Invoice Number: 123".

Nanonets normalizes this. You define a schema (e.g., invoice_date, total_amount, vendor_name). The system forces the chaotic reality of vendor documents into your strict database schema. This is essential before you even think about sending data to NetSuite, Xero, or QuickBooks.
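To make the idea of a strict schema concrete, here is a minimal Python sketch of a check you might run on the receiving side before anything touches the ERP. It is not Nanonets' API or payload format: the flat label-to-value dictionary, the `LABEL_ALIASES` table, the `Invoice` class, the `normalize` function, and the ISO date format are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, datetime
from decimal import Decimal

# Hypothetical mapping from vendor label variants to canonical schema fields.
LABEL_ALIASES = {
    "inv #": "invoice_number",
    "invoice number": "invoice_number",
    "date": "invoice_date",
    "invoice date": "invoice_date",
    "total": "total_amount",
    "amount due": "total_amount",
    "vendor": "vendor_name",
    "supplier": "vendor_name",
}

REQUIRED_FIELDS = {"invoice_number", "invoice_date", "total_amount", "vendor_name"}


@dataclass(frozen=True)
class Invoice:
    """The strict target schema; every field is required and typed."""
    invoice_number: str
    invoice_date: date
    total_amount: Decimal
    vendor_name: str


def normalize(raw: dict[str, str]) -> Invoice:
    """Map raw label/value pairs onto the schema, rejecting incomplete input."""
    canonical: dict[str, str] = {}
    for label, value in raw.items():
        # Ignore case, surrounding whitespace, and a trailing colon in labels.
        key = LABEL_ALIASES.get(label.strip().rstrip(":").lower())
        if key is not None:
            canonical[key] = value.strip()

    missing = REQUIRED_FIELDS - set(canonical)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")

    return Invoice(
        invoice_number=canonical["invoice_number"],
        # Assumes dates arrive as YYYY-MM-DD; anything else raises ValueError.
        invoice_date=datetime.strptime(canonical["invoice_date"], "%Y-%m-%d").date(),
        # Decimal avoids float rounding on monetary amounts.
        total_amount=Decimal(canonical["total_amount"].replace(",", "")),
        vendor_name=canonical["vendor_name"],
    )
```

Under these assumptions, `{"Inv #": "123", ...}` and `{"Invoice Number:": "123", ...}` both produce the same `Invoice`, and a document missing any required field is rejected instead of being posted half-complete.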

2. The Confidence Score Trigger

This is the most technical component, but also the most valuable one for automation architects. Nanonets provides a Confidence Score for each field.

In your automation platform (like Make or n8n), you can build logic like this:

- If Confidence ≥ 95%: Post directly to ERP.

- If Confidence < 95%: Send to a Slack channel or an Airtable "Staging Layer" for review.

This capability allows you to automate the "happy path" while safeguarding against errors.
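The routing rule above can be sketched in a few lines of Python. This is an illustration rather than a Nanonets, Make, or n8n feature: `ExtractedField`, `Route`, and `route_document` are hypothetical names, the 0.0 to 1.0 confidence scale is an assumption, and a score exactly at the threshold is treated as passing here.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    POST_TO_ERP = "post_to_erp"
    MANUAL_REVIEW = "manual_review"


@dataclass(frozen=True)
class ExtractedField:
    name: str
    value: str
    confidence: float  # assumed scale: 0.0 (no confidence) to 1.0 (certain)


def route_document(fields: list[ExtractedField], threshold: float = 0.95) -> Route:
    """Send a document down the happy path only if every field clears the bar."""
    if not fields:
        # Nothing was extracted, so there is nothing safe to post.
        return Route.MANUAL_REVIEW
    # The weakest field decides: one doubtful total is enough to stop the post.
    weakest = min(field.confidence for field in fields)
    return Route.POST_TO_ERP if weakest >= threshold else Route.MANUAL_REVIEW
```

Gating on the lowest field score rather than an average is a deliberately conservative choice: a high average can hide a single low-confidence amount, which is exactly the error a controller wants caught before it reaches the ledger.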

3. Connectivity

Nanonets has a robust API and native integrations with Make and Zapier. This means it fits into a "Composable" stack. You aren't locked into their ecosystem; you can just use them for the extraction heavy lifting and move the data wherever you want.

The Trade-offs

No tool is perfect. Here is what I have observed:

- Cost: It is usage-based and generally more expensive than raw document and vision APIs (like Google Cloud Vision or Amazon Textract). You are paying for the UI and the pre-trained financial models.

- Setup Time: While they claim "instant", training a model to high accuracy on niche documents takes meaningful volume (50+ samples) and human time for the initial corrections.

Comparative Analysis: Nanonets vs. The Field

| Feature | Legacy OCR (e.g., Zonal) | Generic LLMs (e.g., GPT-4o) | Nanonets |
|---|---|---|---|
| Setup | High (Templates) | Low (Prompting) | Medium (Training) |
| Accuracy on New Layouts | Low (Breaks easily) | High (Generalist) | High (Specialized) |
| Confidence Scoring | Binary | Inconsistent (Hallucinations) | Granular |
| Human UI | Clunky / Desktop | None (Chat interface) | Native / Web-based |

Conclusion: The Verdict

For a Financial Controller, Data Integrity is the north star.

While Generic LLMs (like GPT-4o) are catching up rapidly in their ability to read documents, they currently lack the dedicated workflow features—specifically the Verification UI and the structured Confidence Scores—that a finance team requires for an audit-proof process.

I view Nanonets not just as a tool, but as a necessary "Data Airlock." It ensures that the fuel entering your automation engine is clean, preventing the engine from stalling down the road. If your goal is operational efficiency without sacrificing control, it is a strong investment.