Checklist: Adapting Atlassian’s “Definition of Done” For Reliable Growth Automations

I have often found myself in the “builder’s trap.” I discover a new API endpoint or a clever workaround in Make, and I immediately start assembling the workflow. It is exciting to see the data flow from a form to the CRM instantly. But three months later, when leadership asks if that specific automation actually contributed to the quarter’s revenue, I realize I never set up the tracking to prove it.

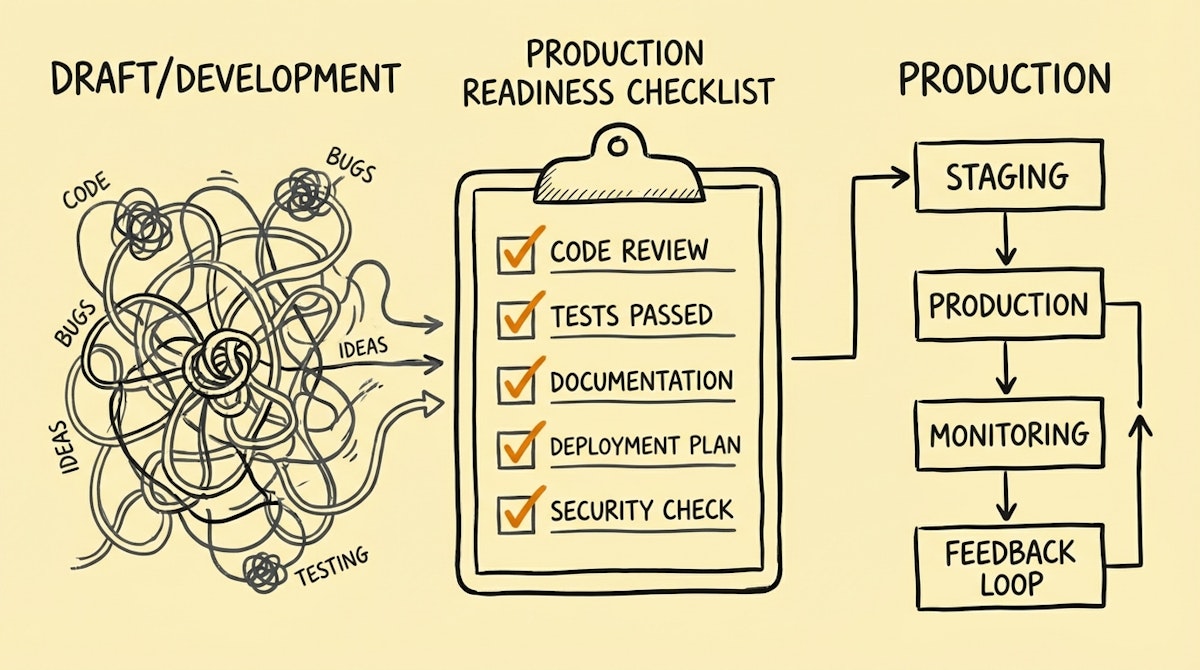

For Growth Engineers, speed is often the primary KPI. We want to iterate on experiments, test new acquisition channels, and optimize conversion rates. However, moving fast without a standard for quality—what Agile developers call a “Definition of Done” (DoD)—creates "Operational Debt." This debt manifests as data silos, broken attribution, and silent failures that erode trust in the systems we build.

Inspired by Atlassian’s approach to engineering rigor, I have outlined a checklist to validate growth automations before they hit production. This isn't just about code quality; it is about ensuring strategic alignment and measurable value.

The “Definition of Done” for Growth Ops

In software engineering, a feature isn't "done" when the code is written; it is done when it is tested, documented, and deployable. For automation, I propose a similar standard. An automation is only "done" when it is observable, resilient, and clearly tied to a business outcome.

Use this checklist as a gatekeeper. If a workflow doesn't tick these boxes, it stays in the draft folder.

Phase 1: Strategic Alignment (The “Why”)

Before opening n8n or Zapier, I force myself to answer these questions. If I cannot, the automation is likely a solution looking for a problem.

- Is the Hypothesis Defined?

- Check: Write down a one-sentence hypothesis (e.g., "Enriching leads with Clearbit will increase email open rates by 5%").

- Why: Prevents building cool tech that drives no business value.

- Is the Success Metric Locked?

- Check: Identify the specific field in the CRM or database that will change to indicate success.

- Why: Without this, you cannot calculate ROI later.

- Is the Executive Sponsor Aware?

- Check: Confirm who owns the P&L for this experiment.

- Why: Ensures you have air cover if the automation accidentally emails the wrong segment.
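The three Phase 1 questions can be turned into a small gate that refuses to pass until each answer is written down. This is only a minimal sketch: `AutomationBrief` and its field names are hypothetical, invented here for illustration, and are not part of any tool mentioned in this post.

```python
from dataclasses import dataclass


@dataclass
class AutomationBrief:
    """Hypothetical record of the Phase 1 answers for one automation."""
    hypothesis: str = ""            # one-sentence hypothesis
    success_metric_field: str = ""  # CRM/database field that signals success
    sponsor: str = ""               # who owns the P&L for the experiment

    def missing(self) -> list:
        """Return the names of the answers that are still blank."""
        return [name for name, value in vars(self).items() if not value.strip()]


brief = AutomationBrief(
    hypothesis="Enriching leads with Clearbit will increase email open rates by 5%",
)
print(brief.missing())
```

If `missing()` returns anything, the workflow stays in the draft folder.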

Phase 2: Data Integrity (The “Input”)

Growth experiments often involve piping data between tools that weren't meant to talk to each other. This is where data leakage happens.

- Is the Unique Identifier Consistent?

- Check: Ensure you are matching records based on a robust ID (e.g., `user_id`, `email`) rather than fuzzy matches (e.g., `First Name`).

- Reference: I previously covered how to handle conflicting data in Preventing CRM Data Decay With The Field-Level Authority Waterfall.

- Is Privacy Compliance Handled?

- Check: Verify that PII (Personally Identifiable Information) is handled according to GDPR/CCPA. Are you storing data where you shouldn't?

- Are Fallback Values in Place?

- Check: If an enrichment API returns `null`, does the automation break, or does it insert a default value (e.g., "Customer")?
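Two of the checks above, a consistent identifier and fallback values, are easy to sketch in code. The example below assumes the match key is the lead's email and that the enriched field is called `job_title`; both names are illustrative assumptions, and only the "Customer" default comes from the checklist itself.

```python
from typing import Optional

DEFAULT_JOB_TITLE = "Customer"  # fallback value from the checklist example


def normalize_email(email: str) -> str:
    """Trim and lowercase so ' Ana@Example.com ' and 'ana@example.com' match."""
    return email.strip().lower()


def merge_enrichment(lead: dict, enrichment: Optional[dict]) -> dict:
    """Return a copy of the lead with enrichment applied.

    A missing payload or a null field falls back to a default
    instead of breaking the workflow.
    """
    enrichment = enrichment or {}
    merged = dict(lead)
    merged["email"] = normalize_email(lead["email"])
    # `job_title` is a hypothetical field name used only for this sketch.
    merged["job_title"] = enrichment.get("job_title") or DEFAULT_JOB_TITLE
    return merged
```

Normalizing the key before matching keeps a stray space or capital letter from creating a duplicate record.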

Phase 3: Operational Resilience (The “How”)

The most dangerous automations are the ones that fail silently. They look like they are working, but they are dropping 10% of your leads.

- Is Error Handling Configured?

- Check: Every critical step (HTTP requests, database writes) must have a "Create Error Record" path.

- Reference: Implementing Safeguarding Critical Workflows With The Dead Letter Queue Process is essential here.

- Are Rate Limits Respected?

- Check: If you are processing a batch of 10,000 users, have you added a `Sleep` module or implemented throttling to avoid hitting API caps?

- Is the Logic Idempotent?

- Check: If the workflow runs twice on the same lead, will it send two emails? It shouldn't.
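The three resilience checks can be combined in a single loop. The sketch below is an assumption-laden illustration rather than a production pattern: `run_batch` is a hypothetical helper, the "already processed" store is an in-memory set (a real workflow would need a persistent store), and the dead letter queue is a plain list standing in for an error table.

```python
import time
from typing import Callable


def run_batch(leads: list, send: Callable[[dict], None], processed: set,
              dead_letters: list, delay_seconds: float = 0.0) -> None:
    """Send each lead at most once, park failures, and pause between calls."""
    for lead in leads:
        key = lead["email"].strip().lower()  # idempotency key
        if key in processed:
            continue  # already handled on a previous run: no second email
        try:
            send(lead)
            processed.add(key)  # mark as done only after a successful send
        except Exception as exc:
            # "Create Error Record" path: keep the payload for later replay
            dead_letters.append({"lead": lead, "error": str(exc)})
        time.sleep(delay_seconds)  # crude throttle to stay under API caps
```

Because a failed lead is never added to `processed`, rerunning the batch retries only the failures and skips everything that already succeeded.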

Phase 4: Value Measurement (The “Outcome”)

This is the step I see skipped most often. If you don't log the execution, you can't prove the value.

- Is the "Action" Logged in a Ledger?

- Check: Create a record in a dedicated `Automation_Log` table (in Airtable or Snowflake) for every successful run.

- Reference: See Architecting the Atomic ROI Ledger to Audit Automation Value.

- Is the Source Tagged?

- Check: Update the lead source or campaign field in the destination app (e.g., `Marketing_Auto_v2`).
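As a rough illustration of the ledger idea, the sketch below writes one row per successful run. It uses Python's built-in `sqlite3` purely as a local stand-in for Airtable or Snowflake, and the column names (`workflow`, `record_id`, `source_tag`, `ran_at`) are assumptions made for this example, not a schema from the referenced article.

```python
import sqlite3
from datetime import datetime, timezone


def open_ledger(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the ledger; SQLite stands in for Airtable/Snowflake."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS Automation_Log (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               workflow TEXT NOT NULL,
               record_id TEXT NOT NULL,
               source_tag TEXT NOT NULL,
               ran_at TEXT NOT NULL
           )"""
    )
    return conn


def log_run(conn: sqlite3.Connection, workflow: str, record_id: str,
            source_tag: str = "Marketing_Auto_v2") -> None:
    """Append one ledger row for a successful run, with a UTC timestamp."""
    conn.execute(
        "INSERT INTO Automation_Log (workflow, record_id, source_tag, ran_at) "
        "VALUES (?, ?, ?, ?)",
        (workflow, record_id, source_tag, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


def runs_per_workflow(conn: sqlite3.Connection) -> dict:
    """Count logged runs per workflow, the raw input for an ROI report."""
    rows = conn.execute(
        "SELECT workflow, COUNT(*) FROM Automation_Log GROUP BY workflow"
    )
    return dict(rows.fetchall())
```

When leadership asks what an automation contributed, `runs_per_workflow` gives the run counts to join against the success metric chosen in Phase 1.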

Assessing Criticality

Not every automation needs a full audit. A Slack notification bot is different from a billing sync. I use a simple matrix to determine how strict the DoD needs to be.

| Risk Level | Requirement | Example |
|---|---|---|
| Low | Basic Error Notification | Internal Slack Alerts |
| Medium | Logs + Retry Logic | Lead Enrichment |
| High | Full Dead Letter Queue + Audit Log | Quote Generation / Billing |
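The matrix can also be encoded as a lookup so a review script can report what a workflow still lacks. This is a hedged sketch: the requirement labels are made up for illustration, and the levels are treated as non-cumulative exactly as the table lists them, which is an assumption about how the matrix is meant to be read.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Requirement labels are illustrative names, one set per row of the matrix.
REQUIREMENTS = {
    Risk.LOW: {"error_notification"},
    Risk.MEDIUM: {"logs", "retry_logic"},
    Risk.HIGH: {"dead_letter_queue", "audit_log"},
}


def missing_requirements(risk: Risk, implemented: set) -> set:
    """Return the requirements for this risk level that are not yet in place."""
    return REQUIREMENTS[risk] - implemented


print(missing_requirements(Risk.MEDIUM, {"logs"}))
```

A lead enrichment workflow with logging but no retries would be flagged as missing `retry_logic` before it ships.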

Conclusion

Adopting a "Definition of Done" feels like it slows you down initially. It takes time to build error handlers and logging tables. However, the confidence you gain allows you to scale much faster in the long run.

When you know your growth experiments are built on solid ground, you stop worrying about whether the system is down and start focusing on what the data is telling you. That is where the real strategic alignment happens.