Contrarian: Replacing CRUD Logic With The Immutable Ledger Process

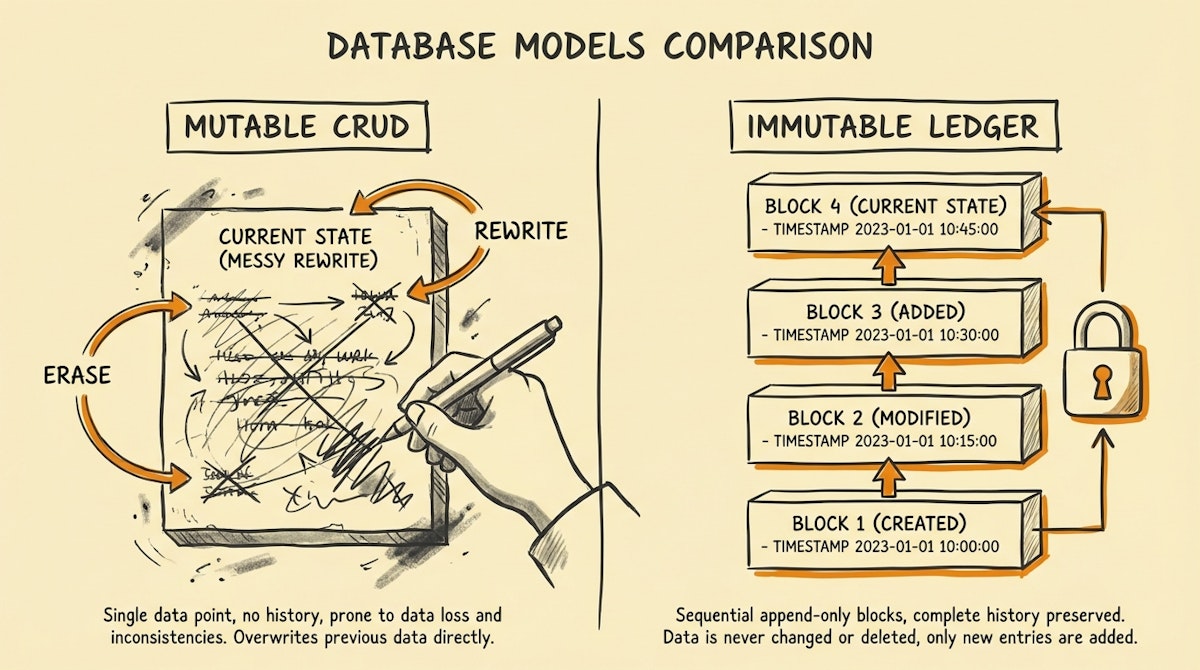

We have been taught that the standard way to interact with a database is CRUD: Create, Read, Update, Delete. For software engineers building consumer apps, this makes sense. But for Data Analysts and Operations professionals responsible for business intelligence, I believe the "U" (Update) and "D" (Delete) are the enemies of integrity.

I have observed countless data teams spend half their week debugging why a dashboard’s numbers shifted overnight. The culprit is almost always a legitimate UPDATE operation that overwrote a previous state, leaving no trace of what the value was before. The data isn't "wrong" per se, but the history is gone, and with it, the context.

There is a better way, often reserved for high-end financial systems or complex software architecture (such as the Event Sourcing pattern described by Martin Fowler), that we should be applying to our everyday automation workflows: The Immutable Ledger Process.

The Problem With Mutable State

When you overwrite a cell in a spreadsheet or update a record in a CRM, you are committing an act of destruction. You are destroying the past to represent the present.

For an analyst, this is a nightmare scenario for two reasons:

- Loss of Causality: If a Lead Score drops from 90 to 20, a simple update hides the trajectory. Was it a gradual decline or a sudden cliff? You will never know.

- The "He Said, She Said" Loop: When sales ops claims a number was X on Monday, and marketing says it is Y on Tuesday, both are right. But without a history log, the dashboard looks broken.

We typically try to solve this by adding "Last Modified By" fields or complex "History Tracking" features (like Salesforce Field History), but these are often band-aids. They are hard to query and don't export cleanly to BI tools.

The Solution: The Immutable Ledger Process

The contrarian approach here is to stop building automations that update records. Instead, treat your data like a financial ledger. Accountants never erase a transaction; they add a compensating entry to correct it.
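To make the accounting analogy concrete, here is a minimal sketch of a compensating entry. The `Entry` class, the `post` and `balance` helpers, and the account name and amounts are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)  # frozen: an entry cannot be modified once created
class Entry:
    entry_id: int
    account: str
    amount: Decimal
    memo: str


ledger: list[Entry] = []  # append-only by convention: we only ever call post()


def post(account: str, amount: str, memo: str) -> Entry:
    """Append a new entry; existing entries are never touched."""
    entry = Entry(len(ledger) + 1, account, Decimal(amount), memo)
    ledger.append(entry)
    return entry


def balance(account: str) -> Decimal:
    """The balance is derived by summing every entry for the account."""
    return sum((e.amount for e in ledger if e.account == account), Decimal("0"))


# A mistake is keyed in, then corrected without erasing anything.
post("acme", "100.00", "invoice keyed in with the wrong amount")
post("acme", "-100.00", "reversal of entry 1")
post("acme", "10.00", "invoice re-entered with the intended amount")

acme_balance = balance("acme")
```

The balance ends up correct, and the ledger still shows that a mistake happened and how it was fixed, which is exactly the information an overwrite would have destroyed.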

In this model, your database (whether it's Postgres, Snowflake, or even Airtable) becomes an Append-Only Log.

Core Principles

- Never Update: If a customer changes their email, you do not overwrite the `email` field. You insert a new row: `event_type: email_changed`, `new_value: ...`, `timestamp: ...`.

- Never Delete: If a user is removed, you insert a `status: deleted` event.

- State is Derived: The "current state" of any entity is simply the sum (or the latest entry) of all its events.
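The three principles can be sketched in a few lines of plain Python. This is an illustration under assumptions, not a prescribed design: the `Event` class, the `current_state` function, the `deleted` event type, and the sample customer are hypothetical names invented for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)  # events are facts about the past, so they are immutable
class Event:
    entity_id: str
    timestamp: str  # ISO 8601 in UTC, so string order matches time order
    event_type: str
    new_value: Optional[str] = None


def current_state(events: list, entity_id: str) -> dict:
    """Derive the current state by replaying an entity's events in time order."""
    state: dict = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.entity_id != entity_id:
            continue
        if event.event_type == "email_changed":
            state["email"] = event.new_value  # later events win
        elif event.event_type == "deleted":
            state["status"] = "deleted"  # a deletion is just another event
    return state


events = [
    Event("cust-1", "2024-01-01T09:00:00Z", "email_changed", "old@example.com"),
    Event("cust-1", "2024-02-01T09:00:00Z", "email_changed", "new@example.com"),
    Event("cust-1", "2024-03-01T09:00:00Z", "deleted"),
]

state = current_state(events, "cust-1")
```

Nothing in `events` was modified or removed to reach the final state; the old email and the moment of deletion both remain available for later questions.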

Implementing The Ledger In Automation

You don't need a blockchain or Kafka to do this. I have seen this successfully implemented using standard low-code tools like Make or n8n feeding into a data warehouse.

1. The Event Stream (Ingestion)

Instead of mapping your form submissions or API webhooks directly to a "Customer Profile" table, map them to an "Events" table.

- Trigger: Webhook from CRM or App.

- Action: `INSERT INTO events_table`.

- Schema: `entity_id` (e.g., Customer ID), `event_timestamp`, `attribute_changed`, `new_value`, `source_system`.
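As a rough sketch of the ingestion step, the snippet below uses Python's built-in `sqlite3` module as a stand-in for a real warehouse. The column names follow the schema above; the `event_id` surrogate key, the two triggers, the `record_event` helper, and the sample values are assumptions added for illustration. The triggers show one way to make the append-only rule enforceable rather than a matter of team discipline.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for the warehouse
conn.executescript("""
CREATE TABLE events_table (
    event_id          INTEGER PRIMARY KEY AUTOINCREMENT,  -- assumed surrogate key
    entity_id         TEXT NOT NULL,
    event_timestamp   TEXT NOT NULL,   -- ISO 8601, UTC
    attribute_changed TEXT NOT NULL,
    new_value         TEXT,
    source_system     TEXT NOT NULL
);

-- Reject any attempt to rewrite or erase history.
CREATE TRIGGER events_no_update BEFORE UPDATE ON events_table
BEGIN
    SELECT RAISE(ABORT, 'events_table is append-only');
END;

CREATE TRIGGER events_no_delete BEFORE DELETE ON events_table
BEGIN
    SELECT RAISE(ABORT, 'events_table is append-only');
END;
""")


def record_event(conn, entity_id, event_timestamp, attribute_changed,
                 new_value, source_system):
    """The only write path: every incoming webhook becomes one inserted row."""
    conn.execute(
        "INSERT INTO events_table (entity_id, event_timestamp, "
        "attribute_changed, new_value, source_system) VALUES (?, ?, ?, ?, ?)",
        (entity_id, event_timestamp, attribute_changed, new_value, source_system),
    )
    conn.commit()


record_event(conn, "cust-1", "2024-01-01T09:00:00Z", "email", "old@example.com", "crm")
record_event(conn, "cust-1", "2024-02-01T09:00:00Z", "email", "new@example.com", "crm")

# An UPDATE is refused by the trigger instead of silently overwriting a value.
try:
    conn.execute("UPDATE events_table SET new_value = 'x' WHERE entity_id = 'cust-1'")
    update_blocked = False
except sqlite3.DatabaseError:
    conn.rollback()
    update_blocked = True

event_count = conn.execute("SELECT COUNT(*) FROM events_table").fetchone()[0]
```

In a low-code tool the equivalent of `record_event` is simply the insert-row action; the point is that no scenario in the workflow ever maps to an update-row or delete-row action on this table.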

2. The "Latest State" View (Transformation)

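This is where the Data Analyst shines. Instead of trying to maintain a clean table, you write a SQL View (or a transformation step in dbt) that grabs the latest record for each entity and attribute. Partitioning by `entity_id` alone would return only the most recently changed attribute rather than the full profile, so the partition includes `attribute_changed` as well. `QUALIFY` is available in Snowflake and BigQuery; Postgres does not support it, so there you would filter on `ROW_NUMBER()` in a subquery instead.

    SELECT * FROM events_table
    QUALIFY ROW_NUMBER() OVER (PARTITION BY entity_id, attribute_changed ORDER BY event_timestamp DESC) = 1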

This gives you your "Real-Time Dashboard" view, but underneath it, you have retained 100% of the history.
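For engines without `QUALIFY` (Postgres and SQLite, for example), the same idea can be written with a subquery. The sketch below runs against SQLite through Python's `sqlite3` module and assumes a SQLite build with window function support (3.25 or later). It partitions by entity and attribute so that each attribute keeps its own latest value. The `latest_state` view name and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE events_table (
    entity_id         TEXT NOT NULL,
    event_timestamp   TEXT NOT NULL,
    attribute_changed TEXT NOT NULL,
    new_value         TEXT,
    source_system     TEXT NOT NULL
)""")

conn.executemany(
    "INSERT INTO events_table VALUES (?, ?, ?, ?, ?)",
    [
        ("cust-1", "2024-01-01T09:00:00Z", "email", "old@example.com", "crm"),
        ("cust-1", "2024-01-05T09:00:00Z", "lead_score", "90", "crm"),
        ("cust-1", "2024-02-01T09:00:00Z", "email", "new@example.com", "crm"),
        ("cust-1", "2024-02-10T09:00:00Z", "lead_score", "20", "crm"),
    ],
)

# Rank each attribute's events newest-first, then keep only rank 1.
conn.execute("""
CREATE VIEW latest_state AS
SELECT entity_id, attribute_changed, new_value, event_timestamp
FROM (
    SELECT *,
           ROW_NUMBER() OVER (
               PARTITION BY entity_id, attribute_changed
               ORDER BY event_timestamp DESC
           ) AS rn
    FROM events_table
) AS ranked
WHERE rn = 1
""")

rows = conn.execute(
    "SELECT attribute_changed, new_value FROM latest_state "
    "WHERE entity_id = ? ORDER BY attribute_changed",
    ("cust-1",),
).fetchall()
profile = dict(rows)  # one key per attribute, holding its latest value

total_events = conn.execute("SELECT COUNT(*) FROM events_table").fetchone()[0]
```

The view returns one row per attribute for the dashboard, while all four underlying events stay in `events_table` untouched.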

Analyzing The Trade-offs

Moving from Mutable (CRUD) to Immutable (Ledger) requires a shift in mindset and architecture. Here is how they compare:

| Feature | Mutable State (CRUD) | Immutable Ledger (Append-Only) |
|---|---|---|
| Data Integrity | Low (History is lost) | Absolute (Full audit trail) |
| Debugging | Difficult (Guesswork) | Trivial (Replay the tape) |
| Storage Volume | Static | Linear Growth |
| Complexity | Low (Standard) | Moderate (Requires transformation) |

Conclusion: Trade Space for Sanity

In the past, storage was expensive, so overwriting data saved money. Today, storage in BigQuery or S3 is effectively free compared to the cost of an Analyst's time spent debugging data decay.

By adopting the Immutable Ledger Process, you stop playing detective with your data. You can travel back in time to see exactly what the world looked like last month, and you gain the trust of stakeholders because you can explain every change.
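The "travel back in time" claim can be illustrated with an as-of query: apply the latest-record logic, but only to events at or before a chosen moment. This is a sketch using Python's `sqlite3` module (SQLite 3.25 or later for window functions); the `state_as_of` and `history` helpers and the lead score values are hypothetical, and timestamps are assumed to be ISO 8601 strings in UTC so that string comparison matches chronological order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE events_table (
    entity_id         TEXT NOT NULL,
    event_timestamp   TEXT NOT NULL,
    attribute_changed TEXT NOT NULL,
    new_value         TEXT,
    source_system     TEXT NOT NULL
)""")
conn.executemany(
    "INSERT INTO events_table VALUES (?, ?, ?, ?, ?)",
    [
        ("cust-1", "2024-01-05T09:00:00Z", "lead_score", "90", "crm"),
        ("cust-1", "2024-01-20T09:00:00Z", "lead_score", "55", "crm"),
        ("cust-1", "2024-02-10T09:00:00Z", "lead_score", "20", "crm"),
    ],
)


def state_as_of(conn, entity_id, as_of):
    """Latest value of each attribute, ignoring events after `as_of`."""
    rows = conn.execute(
        """
        SELECT attribute_changed, new_value
        FROM (
            SELECT attribute_changed, new_value,
                   ROW_NUMBER() OVER (
                       PARTITION BY attribute_changed
                       ORDER BY event_timestamp DESC
                   ) AS rn
            FROM events_table
            WHERE entity_id = ? AND event_timestamp <= ?
        ) AS ranked
        WHERE rn = 1
        """,
        (entity_id, as_of),
    ).fetchall()
    return dict(rows)


def history(conn, entity_id, attribute):
    """Full trajectory of one attribute, oldest first."""
    return conn.execute(
        "SELECT event_timestamp, new_value FROM events_table "
        "WHERE entity_id = ? AND attribute_changed = ? "
        "ORDER BY event_timestamp",
        (entity_id, attribute),
    ).fetchall()


end_of_january = state_as_of(conn, "cust-1", "2024-01-31T23:59:59Z")
latest = state_as_of(conn, "cust-1", "9999-12-31T23:59:59Z")
trajectory = history(conn, "cust-1", "lead_score")
```

Here `end_of_january` and `latest` answer the "what was it on Monday versus Tuesday" argument directly, and `trajectory` shows whether the score fell gradually or dropped off a cliff.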

Stop optimizing for storage. Start optimizing for truth.
