Comparing Deterministic Lookups Against Semantic Classification For GL Coding

Table of Contents

- The Month-End Bottleneck

- Contender 1: The Deterministic Lookup Table

- Contender 2: The Semantic LLM Classifier

- Head-to-Head Comparison

- The Verdict: The Waterfall Architecture

- References

The Month-End Bottleneck

One of the most persistent frictions in financial operations is the categorization of bank feeds and credit card transactions. For a Financial Controller, the integrity of the General Ledger (GL) relies on consistency. "Amazon Web Services" must always hit the Hosting/Infrastructure expense account, not Office Supplies.

Traditionally, automation in this space has been rigid. We build rules. If text contains "Uber", tag as "Travel". But vendor descriptors change, new SaaS tools appear monthly, and maintaining these rules becomes a job in itself.

Recently, the narrative has shifted to "Just let AI handle it." While Large Language Models (LLMs) are impressive, handing over your GL integrity to a probabilistic model feels risky. If the AI hallucinates, your P&L is wrong.

In this analysis, I break down the trade-offs between the traditional Deterministic Lookup Process and the modern Semantic LLM Classifier, helping you decide which architecture fits your risk tolerance and volume.

Contender 1: The Deterministic Lookup Table

This is the standard approach most of us are familiar with, often managed in Excel or, more robustly, in a database like Airtable.

How it works

In a tool like Make, the automation receives a transaction description (e.g., "AMZN Mktp US"). It queries a reference database for an exact match or a partial text match. If found, it returns the pre-assigned GL Code.

The Logic:IF [Description] CONTAINS "Adobe" THEN [GL_Code] = "6040 - Software Subs"

Pros:

- 100% Predictability: If the rule exists, the output is guaranteed. There is zero "drift."

- Auditability: You can point exactly to the record that caused a categorization.

- Cost: Extremely low. Standard database query operations are negligible in cost.

Cons:

- High Maintenance: This system is brittle. If a vendor changes their billing descriptor from "JIRA" to "Atlassian", the automation fails or categorizes it as "Uncategorized", requiring manual intervention.

- The "Long Tail" Problem: You can easily map your top 20 vendors, but the hundreds of one-off transactions (the long tail) remain unautomated because creating rules for them yields negative ROI.

Contender 2: The Semantic LLM Classifier

This approach swaps the lookup table for a prompt sent to an LLM via API (like OpenAI's GPT-4o or Anthropic's Claude).

How it works

The automation sends the transaction details and a simplified version of your Chart of Accounts to the model. The prompt asks the model to infer the correct category based on the context of the vendor name.

The Logic:Input: "Vercel Inc." | Context: "Web hosting platform" -> Inference: "6040 - Hosting/Infrastructure"

Pros:

- Handles Ambiguity: It knows that "Blue Bottle" and "Starbucks" are both coffee without needing two separate rules.

- Zero Maintenance: It adapts to new vendors instantly. You don't need to update a database when your marketing team buys a subscription to a new tool.

Cons:

- Non-Deterministic: There is a non-zero chance the model categorizes a large software purchase as "Office Supplies" depending on how it interprets the name. This introduces compliance risk.

- Latency & Cost: API calls to LLMs are slower and more expensive than database lookups, though prices are dropping rapidly.

Head-to-Head Comparison

| Feature | Deterministic Lookup | Semantic Classifier |

|---|---|---|

| Accuracy | 100% (on known vendors) | 95-99% (Variable) |

| Setup Time | High (Data entry required) | Low (Prompt engineering only) |

| Maintenance | High (Continuous updates) | Near Zero |

| Cost per Op | Negligible | Moderate |

| Best For | Recurring, high-volume vendors | One-off, long-tail SaaS |

| Risk | False Negatives | False Positives |

The Verdict: The Waterfall Architecture

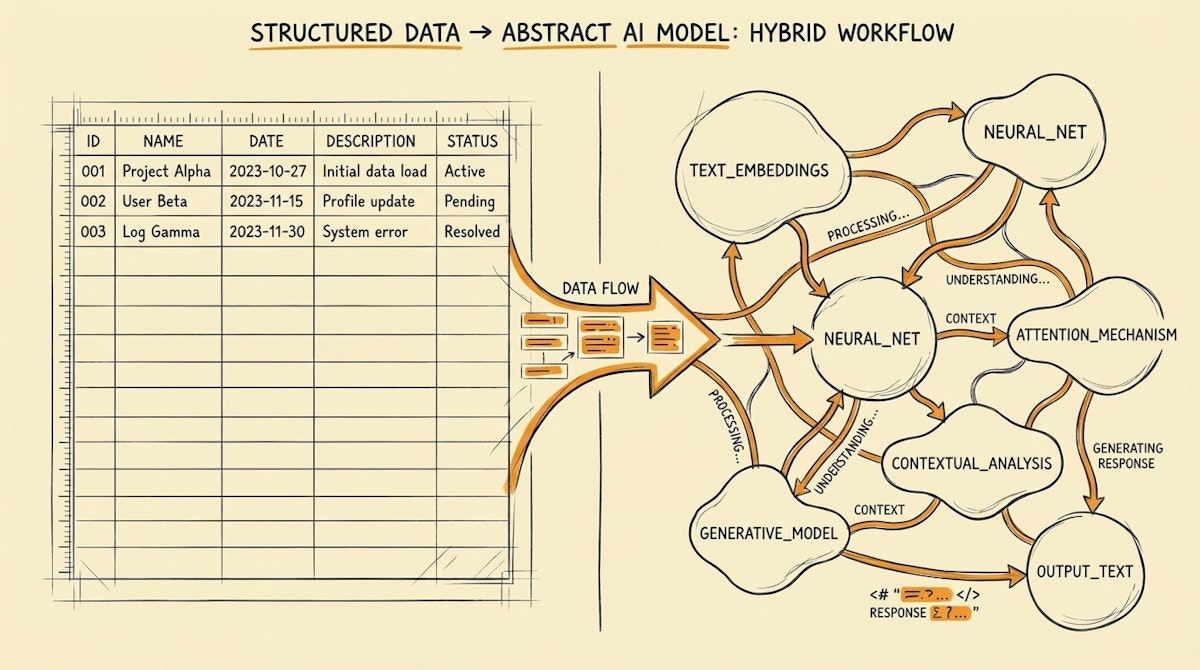

You should not choose one over the other. For a robust financial operations system, the best architecture is a Hybrid Waterfall.

We cannot tolerate hallucinations in financial data, but we also cannot afford to manually categorize every new $15 SaaS subscription.

The most effective pattern I have deployed follows this hierarchy:

- Step 1: The Exact Match. The automation first checks your trusted Airtable Master Data. If "Github" is found, apply the GL code. Stop.

- Step 2: The Semantic Fallback. If (and only if) no match is found, send the data to the LLM. Ask it to propose a category and a confidence score.

- Step 3: The Human Review. If the LLM confidence is high, provisionally tag it. If low, or if the amount exceeds a specific threshold (e.g., >$1,000), route it to a manual review view in Airtable for the Controller to approve.

This method ensures your high-volume, critical data remains 100% accurate (governed by rules), while the AI handles the messy long tail that usually eats up your Friday afternoons.