Forecasting the Shift from Deterministic Filters to Semantic Routing

The End of Hard-Coded Logic in Automation

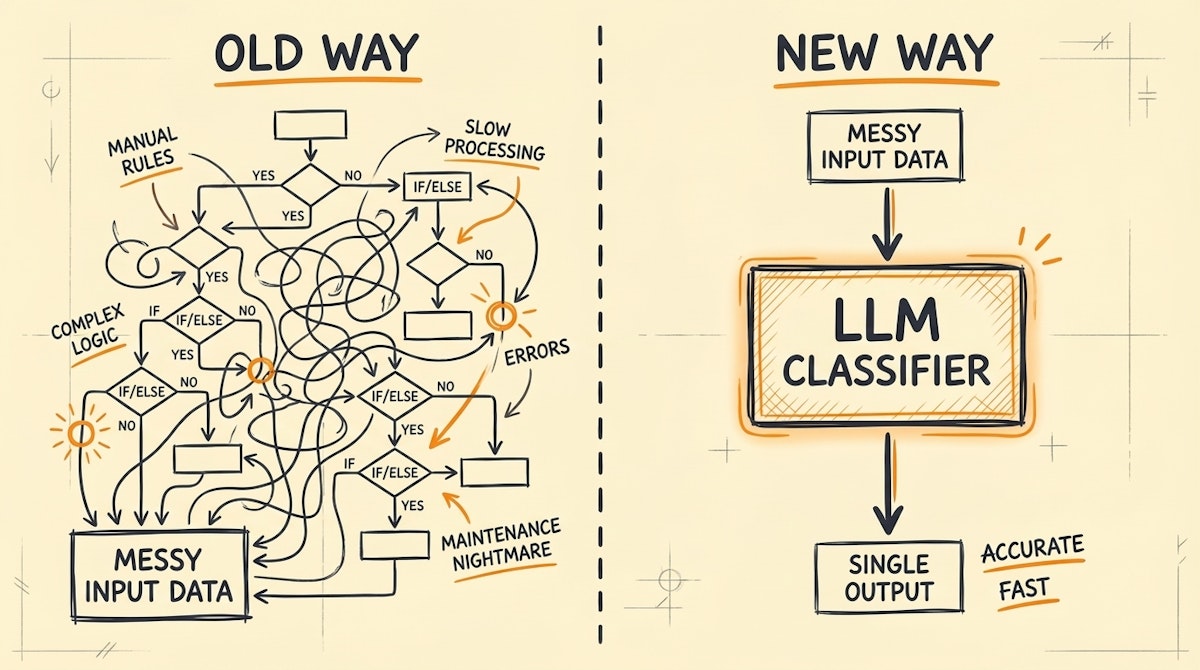

If you have spent any significant time inside Make or Zapier building high-volume workflows, you know the specific pain of the "Router" module. You build a complex path based on exact text matches—expecting a lead status to be exactly "Qualified" or a subject line to contain specific keywords. Then, a human upstream makes a typo, or a form field is updated without notice, and the entire automation fails silently or routes data down the wrong path.

For years, the constraint keeping us in this brittle state was cost. Using an LLM just to decide which path an automation should take was overkill. You don't spend $0.03 per execution just to check if an email is a sales inquiry or a support ticket. You write an "If/Else" condition and hope the data is clean.

However, the constraints have shifted drastically in the last six months. With the release of small models like GPT-4o mini and Claude 3 Haiku, the cost of "reasoning" has dropped to a fraction of a cent per call, and latency is typically low enough for the model to sit inline in a workflow like any other API call.

My forecast is simple: over the next year, Growth Engineers will stop building complex, deterministic filter stacks and begin relying on the Soft-Logic Routing Process. We are moving from strict rule-based automation to probabilistic, semantic orchestration.

The Constraint That Changed: The Cost of Logic

Previously, we had to choose between dumb/cheap (regex, exact-match filters) and smart/expensive (GPT-4). There was no middle ground for high-volume operations.

Today, the math has inverted. The time it takes you to maintain and debug a 10-branch router in Make costs significantly more than the API fees to have a small, fast model handle the decision-making for you. The risk of "drift"—where data formats change over time—is mitigated because LLMs handle variance naturally.
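To make that comparison concrete, here is a back-of-the-envelope sketch in Python. Every number in it is a hypothetical placeholder, not a measured cost or a published price; substitute your own run volume, per-call fee, and maintenance hours.

```python
def monthly_llm_cost(runs_per_month: int, cost_per_run_usd: float) -> float:
    """API spend for letting a small model make the routing decision."""
    return runs_per_month * cost_per_run_usd


def monthly_maintenance_cost(hours_per_month: float, hourly_rate_usd: float) -> float:
    """Engineering time spent patching and debugging filter branches."""
    return hours_per_month * hourly_rate_usd


def break_even_runs(hours_per_month: float, hourly_rate_usd: float,
                    cost_per_run_usd: float) -> float:
    """Monthly run volume at which API fees equal the maintenance cost."""
    return monthly_maintenance_cost(hours_per_month, hourly_rate_usd) / cost_per_run_usd


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to illustrate the arithmetic.
    runs, fee = 20_000, 0.0005   # runs per month, USD per classification call
    hours, rate = 2.0, 75.0      # maintenance hours per month, USD per hour

    print(f"LLM routing:        ${monthly_llm_cost(runs, fee):.2f} per month")
    print(f"Filter maintenance: ${monthly_maintenance_cost(hours, rate):.2f} per month")
    print(f"Break-even volume:  {break_even_runs(hours, rate, fee):,.0f} runs per month")
```

If your real volume sits well below the break-even figure, the model call is the cheaper option; well above it, the deterministic filter may still win on cost.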

The Soft-Logic Routing Process

This shift requires a change in how we architect our scenarios in Make. Instead of building logic into the structure of the scenario (the visual router branches), we move the logic into the prompt.

Here is how the architecture looks when you embrace this forecast.

1. The Consolidation Phase

In a traditional setup, you might trigger a workflow and immediately hit a router with five different filters. In the Soft-Logic process, you remove the initial router entirely. You ingest the raw data—messy email bodies, unformatted form responses, transcript dumps—directly into a variable.
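In Make this step is configured visually, but the idea can be sketched in Python: flatten whatever arrived into one text blob and defer all interpretation to the classifier. The `consolidate` helper and the field names below are hypothetical, used only for illustration.

```python
def consolidate(payload: dict) -> str:
    """Flatten a trigger payload into one block of text for the classifier.

    No filtering or keyword checks happen here; empty fields are simply skipped.
    """
    parts = []
    for key, value in payload.items():
        if value is None:
            continue
        text = str(value).strip()
        if text:
            parts.append(f"{key}: {text}")
    return "\n".join(parts)


if __name__ == "__main__":
    # Hypothetical form submission with messy, partly empty fields.
    raw = consolidate({
        "subject": "  pricing question?? ",
        "body": "hi, do u offer team plans",
        "phone": None,
    })
    print(raw)
```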

2. The Semantic Classifier (The Brain)

This is the core replacement. You insert an LLM completion module (using a cheap, fast model) early in the workflow. Its only job is to classify the intent or state of the data.

The Prompt Structure:

- Role: You are a data router.

- Input: [Raw Data]

- Task: Analyze the input and categorize it into exactly one of these buckets: [Sales, Support, Hiring, Spam].

- Output: Return strictly the JSON object {"category": "Selection"}.

Because these newer models are optimized for instruction following and JSON output, they rarely hallucinate on such narrow tasks.
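Rarely is not never, so it helps to validate the reply before routing on it. The sketch below assembles the prompt structure above and parses the reply defensively. It makes no API call: `model_reply` stands in for whatever text your LLM module returns, and the `Unclassified` fallback bucket is an assumption added here, not part of the original four categories.

```python
import json

CATEGORIES = ("Sales", "Support", "Hiring", "Spam")
FALLBACK = "Unclassified"  # hypothetical catch-all for replies that fail validation


def build_prompt(raw_data: str) -> str:
    """Assemble the role, input, task, and output instructions into one prompt."""
    buckets = ", ".join(CATEGORIES)
    return (
        "You are a data router.\n"
        f"Input: {raw_data}\n"
        "Task: Analyze the input and categorize it into exactly one of these "
        f"buckets: [{buckets}].\n"
        'Output: Return strictly the JSON object {"category": "<bucket>"}.'
    )


def parse_category(model_reply: str) -> str:
    """Return a known category, or FALLBACK if the reply is malformed."""
    try:
        payload = json.loads(model_reply)
    except json.JSONDecodeError:
        return FALLBACK
    if not isinstance(payload, dict):
        return FALLBACK
    category = payload.get("category")
    return category if category in CATEGORIES else FALLBACK


if __name__ == "__main__":
    print(build_prompt("hi, do u offer team plans"))
    print(parse_category('{"category": "Sales"}'))
    print(parse_category("Sure! I think this is a sales inquiry."))
```

Adding a bucket later means editing `CATEGORIES` once; both the prompt and the validation pick it up.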

3. The Simplified Switch

Now, your Make router becomes incredibly simple. You don't need complex AND/OR operators checking for keywords. You simply route based on the category variable returned by the LLM.

- Route A: category = Sales

- Route B: category = Support
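Outside of Make, the same switch can be sketched as a lookup table keyed on the category. The handler functions below are hypothetical stand-ins for whatever each route does, and the manual-review default is an assumption added so that an unexpected category never takes a wrong path silently.

```python
from typing import Callable, Dict


def handle_sales(item: str) -> str:
    # Hypothetical handler: in a real scenario this might create a CRM deal.
    return f"sales:{item}"


def handle_support(item: str) -> str:
    # Hypothetical handler: in a real scenario this might open a ticket.
    return f"support:{item}"


def manual_review(item: str) -> str:
    # Default path for any category without a dedicated route.
    return f"review:{item}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "Sales": handle_sales,
    "Support": handle_support,
}


def route(category: str, item: str) -> str:
    """Dispatch on the classifier's category; unknown values go to review."""
    handler = ROUTES.get(category, manual_review)
    return handler(item)


if __name__ == "__main__":
    print(route("Sales", "lead-123"))
    print(route("Billing", "msg-456"))
```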

Why This Matters for Scaling

The immediate objection here is usually accuracy. "Code is 100% accurate; LLMs are probabilistic." While true in theory, in practice, hard-coded filters are often less accurate because they are intolerant of variance. If a lead comes in as "High Value" instead of "High-Value," a code-based filter misses it. A semantic router understands they are the same concept.
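The brittleness is easy to reproduce. The short Python sketch below shows an exact-match filter missing the "High Value" variant, and a patched, normalizing filter still missing a variant nobody anticipated ("Hi-Value" is a made-up example). It does not demonstrate the semantic router itself, only the failure mode it is meant to absorb.

```python
def exact_match_filter(status: str) -> bool:
    """The classic router condition: status must equal one literal string."""
    return status == "High-Value"


def normalized_filter(status: str) -> bool:
    """A common patch: ignore case, spaces, and hyphens before comparing."""
    cleaned = status.lower().replace("-", "").replace(" ", "")
    return cleaned == "highvalue"


if __name__ == "__main__":
    for status in ["High-Value", "High Value", "high value", "Hi-Value"]:
        print(f"{status!r}: exact={exact_match_filter(status)}, "
              f"normalized={normalized_filter(status)}")
```

Each new variant found in production means another rule; the rule set only ever covers the variance someone has already seen.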

Adaptability

If you want to add a new category later, you don't need to surgically alter a dozen filter modules. You just update the prompt in one place. This reduces the "technical debt" of your automation significantly.

Resilience

This approach absorbs the chaos of real-world data. It allows your system to handle misspellings, slang, and unexpected formatting without breaking the workflow.

Conclusion

The most effective automations are often the ones that require the least maintenance. By shifting the routing burden from brittle, hard-coded logic to flexible, semantic reasoning, you trade a fraction of a cent per run for hours of regained engineering time. As model costs continue to race toward the bottom, the Soft-Logic Routing Process will become the standard for handling unstructured data at scale.